Lab 7: Neural Nets

From 6.034 Wiki


Revision as of 02:21, 5 November 2015


This lab is due by TODO at 10:00pm.

To work on this lab, you will need to get the code, much like you did for the first two labs.

- You can view the files at: http://web.mit.edu/6.034/www/labs/lab6/

- Download it as a ZIP file: http://web.mit.edu/6.034/www/labs/lab6/lab6.zip

- Or, on Athena, add 6.034 and copy it from /mit/6.034/www/labs/lab6/.

Your answers for this lab belong in the main file lab6.py.

Problems

Neural Nets

Helper functions

stairstep: Computes the output of the stairstep function using the given threshold.

sigmoid: Computes the output of the sigmoid function using the given steepness and midpoint.

accuracy: Computes the accuracy from desired_output and actual_output. If the output is binary, the accuracy ranges from -0.5 to 0; higher values are better.
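
A minimal sketch of the three helpers in Python, the language of lab6.py. The formulas are assumptions rather than the lab's reference code: stairstep returns 1 at or above the threshold, sigmoid is the logistic curve 1/(1 + e^(-steepness*(x - midpoint))), and accuracy is taken to be -0.5*(desired - actual)^2, which is consistent with the -0.5 to 0 range given above. Parameter names and defaults are illustrative.

```python
import math


def stairstep(x, threshold=0):
    """Return 1 if x meets or exceeds the threshold, else 0 (assumed convention)."""
    return 1 if x >= threshold else 0


def sigmoid(x, steepness=1, midpoint=0):
    """Logistic function centered at `midpoint` and scaled by `steepness`."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))


def accuracy(desired_output, actual_output):
    """Assumed performance measure: -1/2 (d - o)^2, so 0 is a perfect match."""
    return -0.5 * (desired_output - actual_output) ** 2


print(stairstep(0.3, threshold=0.5))  # 0
print(sigmoid(0))                     # 0.5
print(accuracy(1, 0))                 # -0.5
print(accuracy(1, 0.5))               # -0.125
```

Note that math.exp can overflow for extreme arguments; a more careful version would guard against that.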

Wiring a neural net

TODO (this section will probably be cut, or changed to just specify the number of neurons per layer)

Forward propagation

Given a neural net and a dictionary of input values, performs forward propagation with the given threshold function to compute the binary output.

This function should not modify the input net. It returns a tuple containing:

1) a dictionary mapping neurons to their immediate outputs

2) the final binary output (0 or 1)
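
The forward pass can be sketched over a hypothetical dict-based representation, since this page does not spell out the NeuralNet methods: each neuron maps to a list of (source, weight) pairs, and neurons are listed in an order where every neuron follows its sources. The function name forward_prop and its parameters are assumptions. Each neuron's weighted sum is passed through threshold_fn, and the net itself is only read.

```python
def forward_prop(net, order, output_neuron, input_values, threshold_fn):
    """Forward propagation over a hypothetical dict-based net.

    net:   neuron name -> list of (source, weight) pairs; a source is a
           variable input name (str), a constant (int/float), or a neuron name.
    order: neuron names arranged so each neuron comes after all of its sources.
    Returns (outputs of every neuron, output of output_neuron). `net` is only
    read, never modified.
    """
    outputs = {}
    for neuron in order:
        total = 0.0
        for source, weight in net[neuron]:
            if isinstance(source, (int, float)):
                value = source                # constant input such as -1
            elif source in outputs:
                value = outputs[source]       # an earlier neuron's output
            else:
                value = input_values[source]  # a variable input
            total += weight * value
        outputs[neuron] = threshold_fn(total)
    return outputs, outputs[output_neuron]


def step(x):
    """Stairstep at 0; a weighted -1 constant input shifts the effective threshold."""
    return 1 if x >= 0 else 0


# A single neuron computing A AND B: fires only when A + B - 1.5 >= 0.
and_net = {"N": [("A", 1), ("B", 1), (-1, 1.5)]}
print(forward_prop(and_net, ["N"], "N", {"A": 1, "B": 1}, step))  # ({'N': 1}, 1)
print(forward_prop(and_net, ["N"], "N", {"A": 1, "B": 0}, step))  # ({'N': 0}, 0)
```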

Backward propagation

update_weights: Performs a single step of back propagation. Computes the delta_B values and weight updates for the entire neural net, then updates all the weights. Uses the sigmoid function to compute outputs.

Returns a tuple containing:

1) the modified neural net, with updated weights

2) a dictionary mapping neurons to delta_B values
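
A sketch of one update step using the common sigmoid back-propagation rules: for the output neuron, delta_B = o_B(1 - o_B)(d - o_B); for any other neuron, delta_B = o_B(1 - o_B) times the sum of w_BC * delta_C over its outgoing wires; each weight then changes by r * o_A * delta_B, where o_A is the value arriving on the wire. The dict-based net, the ordering list, and the learning rate r are hypothetical, and this version returns an updated copy of the net rather than modifying it in place.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def node_value(source, outputs, input_values):
    """Value carried on a wire leaving `source` (constant, neuron, or input)."""
    if isinstance(source, (int, float)):
        return source
    if source in outputs:
        return outputs[source]
    return input_values[source]


def update_weights(net, order, output_neuron, input_values, desired_output, r=1.0):
    """One back-propagation step on a hypothetical dict-based net.

    Returns (updated copy of net, dict mapping each neuron to delta_B).
    """
    # Forward pass: sigmoid output of every neuron, in dependency order.
    outputs = {}
    for neuron in order:
        total = sum(w * node_value(src, outputs, input_values) for src, w in net[neuron])
        outputs[neuron] = sigmoid(total)

    # Backward pass: later neurons first, so downstream deltas already exist.
    deltas = {}
    for neuron in reversed(order):
        out = outputs[neuron]
        if neuron == output_neuron:
            deltas[neuron] = out * (1 - out) * (desired_output - out)
        else:
            downstream = sum(w * deltas[dst]
                             for dst in deltas
                             for src, w in net[dst] if src == neuron)
            deltas[neuron] = out * (1 - out) * downstream

    # Weight update: w_AB += r * o_A * delta_B, written into a new dict.
    new_net = {
        neuron: [(src, w + r * node_value(src, outputs, input_values) * deltas[neuron])
                 for src, w in wires]
        for neuron, wires in net.items()
    }
    return new_net, deltas


net = {"N": [("A", 1.0), (-1, 0.0)]}
new_net, deltas = update_weights(net, ["N"], "N", {"A": 0}, desired_output=1)
print(deltas)   # {'N': 0.125}
print(new_net)  # {'N': [('A', 1.0), (-1, -0.125)]}
```

Processing neurons in reverse dependency order is what lets each hidden neuron reuse the deltas of the neurons it feeds.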

back_prop: Repeatedly updates the weights until the accuracy surpasses minimum_accuracy. Uses the sigmoid function to compute outputs.

Returns a tuple containing:

1) The modified neural net, with trained weights

2) The number of iterations (that is, the number of weight updates)
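
The stopping rule for back_prop can be sketched on the smallest possible net, a single sigmoid neuron, so the example stays self-contained. It repeats the update step until accuracy(desired, actual) exceeds minimum_accuracy and counts the weight updates. The accuracy formula -0.5*(d - o)^2, the learning rate r, and the max_iterations safety cap are assumptions, not part of the lab's specification.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def accuracy(desired_output, actual_output):
    # Assumed performance measure; 0 means a perfect match.
    return -0.5 * (desired_output - actual_output) ** 2


def neuron_output(weights, inputs):
    """Sigmoid output of one neuron; weights[i] multiplies inputs[i]."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)))


def back_prop(weights, inputs, desired_output, minimum_accuracy,
              r=1.0, max_iterations=100000):
    """Train a single sigmoid neuron until accuracy surpasses minimum_accuracy.

    Returns (trained weights, number of weight updates performed).
    """
    weights = list(weights)  # leave the caller's list untouched
    iterations = 0
    while True:
        out = neuron_output(weights, inputs)
        if accuracy(desired_output, out) > minimum_accuracy:
            return weights, iterations
        if iterations >= max_iterations:
            raise RuntimeError("minimum_accuracy not reached")
        # Output-neuron delta, then w_i += r * x_i * delta for every wire.
        delta = out * (1 - out) * (desired_output - out)
        weights = [w + r * x * delta for w, x in zip(weights, inputs)]
        iterations += 1


# Inputs: a variable input fixed at 1 and the constant -1.
weights, iterations = back_prop([0.0, 0.0], [1, -1], desired_output=1,
                                minimum_accuracy=-0.001)
print(iterations, accuracy(1, neuron_output(weights, [1, -1])))
```

The accuracy check happens before each update, so a net that already meets minimum_accuracy comes back unchanged with an iteration count of 0.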

Support Vector Machines

Vector Math

norm(v): Returns the length of the vector v. Note that v can either be a Point instance or a tuple/list of coordinates.

dot_product(u, v): Computes the dot product of two vectors u and v. Again, each vector can be either a Point instance or a tuple/list of coordinates.
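
A sketch of norm and dot_product that accept either form of vector. The Point class here is a hypothetical stand-in with only coords, classification, and alpha attributes; the real class in svm_api.py may expose coordinates differently, and its convert_point_to_coords helper could replace the hypothetical _coords below. Computing the norm as the square root of v · v keeps the two functions consistent.

```python
import math


class Point:
    """Hypothetical stand-in for svm_api.Point; only `coords` is used here."""

    def __init__(self, coords, classification=None, alpha=None):
        self.coords = coords
        self.classification = classification
        self.alpha = alpha


def _coords(v):
    """Accept a Point or a tuple/list of coordinates."""
    return tuple(v.coords) if isinstance(v, Point) else tuple(v)


def dot_product(u, v):
    u, v = _coords(u), _coords(v)
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    return sum(a * b for a, b in zip(u, v))


def norm(v):
    # The length of v is sqrt(v . v).
    return math.sqrt(dot_product(v, v))


print(norm((3, 4)))                        # 5.0
print(dot_product(Point([1, 2]), (3, 4)))  # 11
```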

Equations

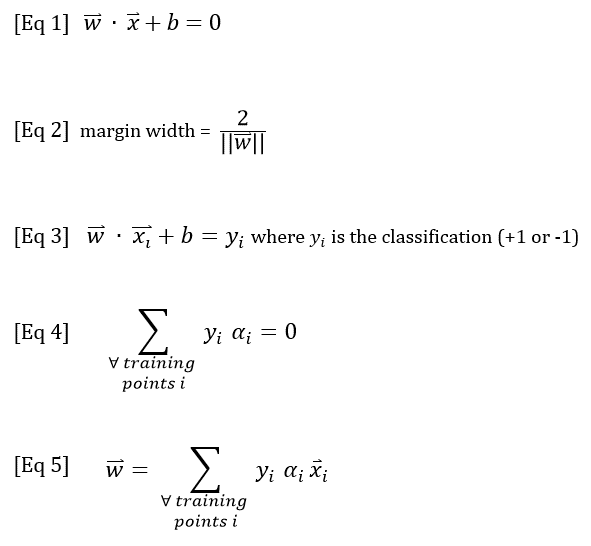

The five equations above may be helpful. Equations 1-3 define the decision boundary and the margin width; Equations 4 and 5 let you calculate the alpha values for the training points.

SVM Functions

positiveness: Evaluates Equation 1 for the given point.

classify: Uses the given SVM to classify a Point, assuming that the point's classification is unknown. Returns +1 or -1, or 0 if the point is on the boundary.

margin_width: Calculates the margin width based on the current decision boundary.
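
A sketch of the three SVM functions, operating directly on a hypothetical DecisionBoundary with attributes w and b (the lab's versions take an SVM object, and the equation image is not reproduced here). It assumes Equation 1 is the usual w · x + b and that the margin width is the standard 2/||w||, the distance between the gutters w · x + b = 1 and w · x + b = -1.

```python
import math


class DecisionBoundary:
    """Hypothetical stand-in: normal vector w (list/tuple) and offset b."""

    def __init__(self, w, b):
        self.w = w
        self.b = b


def positiveness(boundary, coords):
    # Assumed form of Equation 1: w . x + b
    return sum(wi * xi for wi, xi in zip(boundary.w, coords)) + boundary.b


def classify(boundary, coords):
    # +1 on the positive side, -1 on the negative side, 0 on the boundary
    value = positiveness(boundary, coords)
    if value > 0:
        return 1
    if value < 0:
        return -1
    return 0


def margin_width(boundary):
    # Distance between the gutters w . x + b = 1 and w . x + b = -1 is 2 / ||w||
    return 2 / math.sqrt(sum(wi * wi for wi in boundary.w))


boundary = DecisionBoundary(w=(1, 0), b=-2)  # the vertical line x = 2
print([classify(boundary, p) for p in [(3, 5), (2, 7), (1, 0)]])  # [1, 0, -1]
print(margin_width(boundary))                 # 2.0
```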

[I'm changing the docstrings for check_gutter_constraint and check_alphas, so I'll add those later. -jmn] #TODO

Classification Accuracy

[misclassified_training_points -> jmn will add] #TODO

Survey

Please answer these questions at the bottom of your lab6.py file:

- NAME: What is your name? (string)

- COLLABORATORS: Other than 6.034 staff, whom did you work with on this lab? (string, or empty string if you worked alone)

- HOW_MANY_HOURS_THIS_LAB_TOOK: Approximately how many hours did you spend on this lab? (number or string)

- WHAT_I_FOUND_INTERESTING: Which parts of this lab, if any, did you find interesting? (string)

- WHAT_I_FOUND_BORING: Which parts of this lab, if any, did you find boring or tedious? (string)

- (optional) SUGGESTIONS: What specific changes would you recommend, if any, to improve this lab for future years? (string)
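
For reference, the survey answers might look like this at the bottom of lab6.py, assuming plain module-level variables named after the questions; every value below is a placeholder to replace with your own.

```python
# Placeholder survey answers; replace each value with your own.
NAME = "Your Name"
COLLABORATORS = ""                    # empty string if you worked alone
HOW_MANY_HOURS_THIS_LAB_TOOK = 0      # a number or a string
WHAT_I_FOUND_INTERESTING = "Which parts were interesting"
WHAT_I_FOUND_BORING = "Which parts were boring or tedious"
SUGGESTIONS = ""                      # optional
```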

(We'd ask which parts you find confusing, but if you're confused you should really ask a TA.)

When you're done, run the online tester to submit your code.

API

Neural Nets

The file neural_net_api.py defines the Wire and NeuralNet classes, described below.

Wire

A Wire is a directed edge that can be used in a neural net to connect an input to a neuron, a neuron to a neuron, or a neuron to OUT.

NeuralNet

A neural net is represented as a directed graph whose edges are Wires and whose nodes can be neurons, inputs, or OUT.

Note that:

- Each variable input is represented by its name (a string).

- Each constant input is represented by an int or float (e.g., -1).

- Each neuron is represented by its name (a string).

- The final output is represented by the constant string NeuralNet.OUT.
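
The conventions above can be made concrete with a small sketch. Wire, OUT, node_kind, and is_allowed_wire here are hypothetical stand-ins, not the classes in neural_net_api.py; in particular the real value of NeuralNet.OUT and the Wire attribute names are assumptions. Because variable inputs and neurons are both strings, telling them apart requires knowing the set of neuron names.

```python
from collections import namedtuple

# Hypothetical stand-ins: the real classes live in neural_net_api.py and may differ.
OUT = "OUT"  # placeholder for NeuralNet.OUT
Wire = namedtuple("Wire", ["start", "end", "weight"])


def node_kind(node, neuron_names):
    """Classify a node using the representation conventions listed above."""
    if isinstance(node, (int, float)):
        return "constant input"  # e.g. -1
    if node == OUT:
        return "output"
    if node in neuron_names:
        return "neuron"
    return "variable input"      # any other string is an input name


def is_allowed_wire(wire, neuron_names):
    """Allow input -> neuron, neuron -> neuron, or neuron -> OUT."""
    start = node_kind(wire.start, neuron_names)
    end = node_kind(wire.end, neuron_names)
    if start in ("constant input", "variable input"):
        return end == "neuron"
    if start == "neuron":
        return end in ("neuron", "output")
    return False


neurons = {"N1", "N2"}
wires = [Wire("x", "N1", 1.0), Wire(-1, "N1", 0.5),
         Wire("N1", "N2", 2.0), Wire("N2", OUT, 1.0)]
print(all(is_allowed_wire(w, neurons) for w in wires))  # True
print(is_allowed_wire(Wire("x", OUT, 1.0), neurons))    # False
```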

Support Vector Machines

The file svm_api.py defines the Point, DecisionBoundary, and SupportVectorMachine classes, as well as some helper functions for vector math, all described below.

Point

A Point has a list or tuple of coordinates, and optionally a classification or an alpha value.

DecisionBoundary

A DecisionBoundary is defined by two parameters: a normal vector w and an offset b. The vector w is represented as a list or tuple of coordinates.

SupportVectorMachine

A SupportVectorMachine is a classifier that uses a DecisionBoundary to classify points. It has a list of training points and optionally a list of support vectors.

Helper functions for vector math

convert_point_to_coords: Given either a Point object or a tuple of coordinates, returns a tuple of coordinates.

vector_add: Given two vectors represented as lists or tuples of coordinates, returns their sum as a list of coordinates.

scalar_mult: Given a constant scalar and a vector (as a tuple or list of coordinates), returns a scaled list of coordinates.
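
Possible implementations of the three vector helpers, written from the descriptions above rather than copied from svm_api.py. The Point class is again a hypothetical stand-in exposing only coords, classification, and alpha, and the length check in vector_add is an added safeguard.

```python
class Point:
    """Hypothetical stand-in for svm_api.Point."""

    def __init__(self, coords, classification=None, alpha=None):
        self.coords = coords
        self.classification = classification
        self.alpha = alpha


def convert_point_to_coords(x):
    """Return a tuple of coordinates for either a Point or a tuple/list."""
    return tuple(x.coords) if isinstance(x, Point) else tuple(x)


def vector_add(u, v):
    """Element-wise sum of two coordinate sequences, as a list."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(u, v)]


def scalar_mult(c, v):
    """Multiply every coordinate of v by the scalar c, returning a list."""
    return [c * a for a in v]


p = Point([1, 2], classification=1)
print(convert_point_to_coords(p))                   # (1, 2)
print(vector_add([1, 2], (3, 4)))                   # [4, 6]
print(scalar_mult(-2, convert_point_to_coords(p)))  # [-2, -4]
```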