Lab 5 2014

From 6.034 Wiki

Contents |

This is the last problem set in 6.034! It's due Friday, December 3rd at 11:59 PM.

commented out until problem set release FT2010 -kp

To work on this problem set, you will need to get the code:

- You can view it at: http://web.mit.edu/6.034/www/labs/lab5/

- Download it as a ZIP file: http://web.mit.edu/6.034/www/labs/lab5/lab5.zip

- Or, on Athena, add 6.034 and copy it from /mit/6.034/www/labs/lab5/.

- You will need to download and install an additional software package called Orange for the second part of the lab. Orange appears not to work well with Python 2.6, so please use python 2.5. Do this first so that you get the problems worked out early. If you have downloaded and installed it, you should be able to run orange_for_6034.py and get the Orange version number. Once you've filled in the boosting part, you should be able to run lab5.py, and see the output of several classifiers on the vampire dataset. If you get errors, email us.

- Note: Orange is available for Linux (Ubuntu), Windows, and OS X (and we have successfully tested this lab on these platforms). Also, if you are working on Mac OS X, be sure that you have Numpy installed.

- To check that your Orange is properly installed, run: python orange_for_6034.py and you should get a version string and no errors.

- NOTE: Make sure you are running Python 2.5! There have been reported issues with Python 2.6 and Orange.

To work on this lab on Athena you'll need to:

- If you use bash: Setup your environment to pickup the class installation of Orange by adding the following:

export LD_LIBRARY_PATH=/afs/athena.mit.edu/course/6/6.034/lib/python2.5/dist-packages/orange:$LD_LIBRARY_PATH

to your .bashrc.mine file. If you are using tcsh or csh run:setenv LD_LIBRARY_PATH /afs/athena.mit.edu/course/6/6.034/lib/python2.5/dist-packages/orange:$LD_LIBRARY_PATH

instead. You may omit :$LD_LIBRARY_PATH if you get complaints about it not being set. The above settings tell python where to look for shared libraries required for running Orange. NOTE: If you don't know what the shell you are running, type "echo $SHELL". - Log into linux.mit.edu: ssh -X <user>@linux.mit.edu

- For your convenience, we've provided a script, run-orange-gui.sh that will run the Orange GUI on linux.mit.edu. Note that some Orange Widgets (like ROC charting) may not appear in GUI in the Athena version. Don't worry, you won't need the GUI to answer the questions for this lab.

Your answers for the problem set belong in the main file lab5.py, as well as neural_net.py and boost.py.

Neural Nets

In this part of Lab 5, you are to complete the API for a Neural Net. Then afterwards, you are to construct neural nets to solve various problems.

We have provided you a skeleton of the Neural Net in neural_net.py, you are to fill in the unimplemented methods.

Neural nets update weights based on the partial derivative of the performance function with respect to that weight. The formula we have used in class is as follows:

weight[i] = weight[i] + alpha * dP/dweight[i]

where alpha is the learning rate.

About the Classes

ValuedElement: This is an element in our net that has a value and a name. The only ValuedElements are Inputs (e.g. i1, i2) and Weights (e.g. w1A, wAB)

- set_value(self,val) set the value of the element

- get_value(self) get the value of the element

- get_name(self) get the name of the element

DifferentiableElement: These are elements that have outputs and partial derivatives.

- output(self): returns the output of this element

- dOutdX(self,elem): returns the partial derivative with respect to another element

The only DifferentiableElements are Inputs, Neurons, and the PerformanceElem. You will have to write both of these methods for each class. Recall the formulas that we've used in class.

Weight(ValuedElement): Inherits all the ValuedElement methods and also has

- set_next_value(self,val): which sets the next value

- update(self): which sets the value to whatever was stored in next_value

These methods are used for the training algorithm itself and you will probably not need to use them

Neurons(DifferentiableElement): We implemented some caching, so instead of actually writing output and dOutdx, you must instead implement:

- compute_output(self): and

- compute_doutdx(self,elem):

Be sure to use the sigmoid and ds/dz functions as discussed in class

s(z) = 1.0 / (1.0 + e**(-z)) ds(z)/dz = s(z) * (1 - s(z))

PerformanceElem(DifferentiableElement): additionally has

- set_desired which sets the desired_output. Used for training and you will probably not need it.

Be sure to use the performance function and its derivative as discussed in class

P(x) = -0.5 * (d - x) ** 2 dP(x)/dx = (d - x)

Generalize those equations to behave more generally, like:

dP(x)/dz = (d - x) * (dx/dz)

Building Neural Nets

Once you have finished implementing the Neural Net API, you can start building neural nets. For instance here is an example basic neural network:

def make_neural_net_basic():

"""Returns a 1 neuron network with 2 variable inputs, and 1 fixed input."""

i0 = Input('i0',-1.0) # this input is immutable

i1 = Input('i1',0.0)

i2 = Input('i2',0,0)

w1A = Weight('w1A',1)

w2A = Weight('w2A',1)

wA = Weight('wA', 1)

# the inputs must be in the same order as their associated weights

A = Neuron('A', [i1,i2,i0], [w1A,w2A,wA])

P = PerformanceElem(A, 0.0)

# Package all the components into a network

# First list the PerformanceElem P

# Then list all neurons afterwards

net = Network(P,[A])

return net

Naming conventions

IMPORTANT: Be sure to use the following naming convention for the elements in your networks:

Inputs:

- Format: 'i' + input_number

- Conventions:

- Start numbering from 1.

- Use the same i0 for all the fixed -1 inputs

- Examples: 'i1', i2.

Weights:

- Format 'w' + from_identifier + '.' + to_identifier

- Examples:

- w1.A for weight from Input i1 to Neuron A

- wB.C for weight from Neuron B to Neuron C.

Neurons:

- Format: alphabet_letter

- Convention: Assign neuron names in order of distance to the inputs.

- Example: A is the neuron closest to the inputs, and on the left-most (or top-most) side of the net.

- For ties, order neurons from left to right or top to bottom (depending on how you draw orient your network).

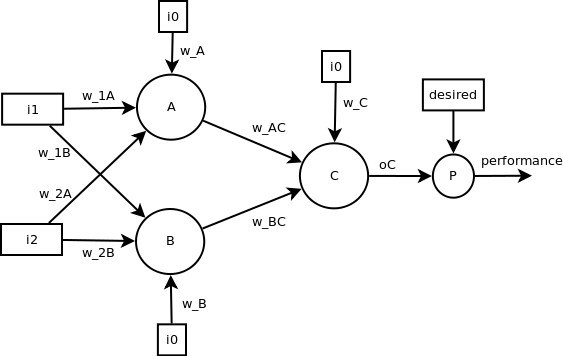

2-layer Neural Net

Use the Neural Net API you've just completed to create a network that looks like the following:

Testing

We have provided a file neural_net_tester.py to help you unit test your neural net implementation, and help you solve subsequent problems.

Datasets

Basic Functions are defined in neural_net_data.py These test the simple network you've implemented. Linearly separable functions such as AND, OR.

Now you can run harder cases, EQUAL, NOT-EQUAL (XOR).

Construct a Neural Net

You are to construct a neural net that can combine two or more boundary regions. Examples of such cases include "the letter-l" and "moat". To pass tests your network must be able to classify these two abstract problems with 100% accuracy.

Final Challenge

While neural nets can learn almost anything. You will find that nets can get stuck in local maximums and get stuck. Try to learn the "patchy" problem. You might get lucky and find a solution right away, but you might also get stuck indefinitely.

You learned in this class, that neural nets at it's optimal is nothing but a bunch of hyperplane dividers, that can be combined with higher level boolean logic. To solve the patchy problem. Use the network you constructed for the last problem make_neural_net_challenging, and provide initial weights. With correct initial weights, your net should be able to test perfectly on the test data.

Boosting

You're still trying to use AI to predict the votes of politicians. ID-Trees were great, but you've heard about these other magnificent learning algorithms like SVMs and Boosting. Boosting sounds easier to implement and had a pretty good reputation, so you decide to start with that.

To make sure that you interpret the results without letting your political preconceptions get in the way, you dig up some old data to work with: in particular, the data from the 4th House of Representatives, which met from 1796 to 1797. (According to the records on voteview.com, this is when the two-party system first emerged, with the two parties being designated "Federalists" and "Republicans".)

You experiment with that data before going on to the 2007-2008 data, finding that Congressmen in 1796 were much more clear about what they were voting on than in 2008.

The framework for a boosting classifier can be found in boost.py. You need to finish coding it, and then use it to learn some classifiers and answer a few questions.

The following resources will be helpful:

- The documentation for the boosting code, which you can find embedded in boost.py in the documentation strings.

- The Shapire paper on boosting, or the notes that summarizes it.

- The Lab 4 writeup, if you need to refer to how data_reader.py represents legislators and votes.

A (clever|cheap) trick

The boosting code uses a trick that means it only has to try half the number of base classifiers.

It turns out that AdaBoost does not really care which side of its base classifier is +1 and which side is -1. If you choose a classifier that is the opposite of the best classifier -- it returns -1 for most points that should be +1, and returns +1 for most points that should be -1, and therefore has a high error rate -- it works the same as if you had chosen the negation of that classifier, which is the best classifier.

If the data reweighting step is implemented correctly, it will produce the same weights given a classifier or its opposite. Also, a classifier with a high error rate will end up with a negative alpha value, so that in the final "vote" of classifiers it will act like its opposite. So the important thing about a classifier isn't that its error rate is low -- it's that the error rate is far from 1/2.

In the boosting code, we take advantage of this. We include only classifiers that output +1 for voting YES on a particular motion, or +1 for voting NO on a particular motion, and as the "best classifier" we choose the classifier whose error rate is farthest from 1/2. If the error rate is high, then the result will act like a classifier that outputs +1 for "voting NO or abstaining", or +1 for "voting YES or abstaining", respectively. This means we don't have to include these classifiers in the base classifier list, speeding up the algorithm by a factor of 2.

Completing the code

Here are the parts that you need to complete:

- In the BoostClassifier class in boost.py, the update_weights method is undefined. You need to define this method so that it changes the data weights in the way prescribed by the AdaBoost algorithm. There are two ways of implementing this update which happen to be mathematically equivalent.

- In the BoostClassifier class, the classify method is also undefined. Define it so that you can use a trained BoostClassifier as a classifier, outputting +1 or -1 based on the weighted results of its base classifiers. Complete the very similar orange_classify method as well.

- In lab5.py, the most_misclassified function is undefined. You will need to define it to answer the questions.

Questions

Answer the two questions republican_newspaper_vote and republican_sunset_vote in lab5.py.

When you are asked how a particular political party would vote on a particular motion, disregard the possibility of abstaining. If your classifier results indicate that the party wouldn't vote NO, consider that an indication that the party would vote YES.

Orange you glad someone else implemented these?

First things first: Have you installed Orange yet?

Now that you've installed Orange, when you run lab5.py, does it complain about Orange, or does it show you the outputs of some classifiers on vampire data?

Getting familiar with Orange

This part is optional: it's about using the Orange GUI to do a little machine learning without doing any programming. We've given you some data files (vampires.tab, H004.tab, adult.tab, titanic.tab, breast-cancer.tab, etc.) that you can play with. Try making something like this screenshot, and look at the performance, and look at the actual predictions.

Using Orange from Python

We have given you a function called describe_and_classify that trains a bunch of classifiers that Orange provides. Some of them will be more familiar than others.

First it trains each classifier on the data, shows its output on each data point from the training data, and shows the accuracy on the training data. You know from class that the accuracy on the training data should be 1 for these classifiers. It is less than one because each classifier comes with built-in regularization to help prevent overtraining. That's what the pruning is for the decision tree, for example. We didn't specify regularization parameters to most of the learners because they have fine defaults. You can read more about them in the Orange documentation.

You'll notice that we do one extra thing with the decision tree. We print it out. For most classifiers, their internal representations are hard for humans to read, but the decision tree's internal representation can be very useful in getting to know your data.

Then describe_and_classify passes the untrained learners, without having seen the data, to cross-validation. Cross-validation hides part of the training data, trains the learner on the rest, and then tests it on the hidden part. To avoid accidentally picking just one terrible subset of the data to hide (an irreproducible subset), it divides the data evenly into some number of folds, successively tests by hiding each fold in turn, and averages over the results. You will find with cross-validation that the classifiers do much better on identifying political parties than they do on vampires. That's because the vampire dataset has so few examples, with so little redundancy, that if you take away one example, it is very likely to remove the information you actually need in order to classify that example.

To ensure that you've gotten the right idea from each part of describe_and_classify, there are six questions just below the function.

Boosting with Orange

You may be able to do better by using AdaBoost over your Orange classifiers than you could do with any of those Orange classifiers by themselves. Then again, you may do worse. That AdaBoost "doesn't tend to overfit" is a somewhat conditional statement that depends a lot on the particular classifiers that boosting gets its pick of. If some of the underlying classifiers tend to overfit, then boosting will greedily choose them first, and it will be stuck with those choices for testing.

We have set up a learner that uses the BoostClassifier from the first part, but its underlying classifiers are the Orange classifiers we were just looking at. When you combine classifiers in this way, you create what's called an "ensemble classifier". You will notice, as you run this new classifier on the various data sets we've provided, that the ensemble frequently performs worse in cross-validation than some (or most) of its underlying classifiers.

Your job is to find a set of classifiers for the ensemble that get at least 74% accuracy on the breast-cancer dataset. You may use any subset of the classifiers we've provided. Put the short names of your classifiers into the list classifiers_for_best_ensemble. There will be honorable mention in class for the best ensemble. Classifier performance appears to be architecture dependent, so you might be able to get to 74% with just one classifier on your machine, but that won't be enough on the server -- in this case, try to get even better performance at home. If you are proud of the way that you went about choosing the best ensemble, let us know to look at your code carefully, and there may be another honorable mention for that.

Bonus!

We think there may be a problem with the code somewhere, but we don't know quite where. If you run the ensemble boosting with the original set of learners (one of each) then you get terrible results for the H109 data set. Can you figure out why?

Errata

It turns out that different architectures yield different results from describe_and_classify on the vampire and H004 datasets. Some folks have perfectly reasonable answers to the short answer part given the output on their machine, but do not pass the tests with those answers. To try and standardize, we've put up the output from the two runs from which we generated the answers.

For the same reason, some folks are getting 74% on their home computers very easily. The question was intended to set a lower limit which was larger than any of the classifiers by itself. If you're getting 74, but the ensemble classifier test is failing, you'll need to try harder. To make that a bit easier, we've made it possible for you to check your proposed ensemble on the machine we're actually testing on. Here is an example query with all of the classifiers in learner except the decision tree. You'll want to use some subset of the classifiers. Think about why the decision tree isn't a good choice as a base classifier for boosting.

Orange appears not to work well with Python 2.6, so please use Python 2.5.

commented out until problem set release ft2010 -kp -->