Lab 6: KNNs & ID Trees

From 6.034 Wiki

Contents |

This lab is due by Friday, October 21 at 10:00pm.

(todo put code online) Your answers for this lab belong in the main file lab5.py. This lab will cover identification trees as well as k-nearest neighbors.

Identification Trees

In this section of the lab, we will first warm up by using an ID tree to classify points. Then, we will learn how to construct new ID trees from raw data, using the techniques we learned in class.

Note: We strongly recommend reading the API section before writing any code. Otherwise, you may find yourself re-writing code that needn't be written again.

Using an ID Tree to classify unknown points

In this lab, we will represent an ID tree recursively as a tree of IdentificationTreeNode objects. For more information, see the API below.

For the ID trees section, we will represent a data point as a dictionary mapping attributes to values. For example:

datapoint1 = {"name":"person1", "vampire":"Yes", "Shadow":"?", "Garlic":"No", "Complexion":"Ruddy", "Accent":"Odd"}

To begin, let's use an ID tree to classify a point! Classifying a point using an ID tree is straight-forward. We recursively apply the current node's classifier to the data point, using the result to choose which branch to take to the child node. If a "leaf" node is ever encountered, that node gives the final classification of the point.

Write a function id_tree_classify_point that takes in a point (represented as a dictionary) and an ID tree (represented as a IdentificationTreeNode) and uses the ID tree to classify the point (even if the point already has a classification defined), returning the final classification:

def id_tree_classify_point(point, id_tree):

This function can be written cleanly in 3-4 lines, either iteratively or recursively. If you're not sure how, check out the available methods in the IdentificationTreeNode API. In particular, the methods .apply_classifier(point) and .get_node_classification() may be useful.

Calculating Disorder

Now that we're able to use an ID tree to classify points, it's time to actually construct an ID tree yourself! We'll start by coding the information-disorder equations to calculate the disorder of a branch or test. These equations are explained in more detail on page 429 of the reading.

(todo provide equations here & explain disorder)

Before we begin coding, here's a quick description of the nomenclature we use:

- A branch is a Python list of classifications representing the points that, upon applying a particular classifier (e.g. "Eats garlic") to a set of many points, all have the same feature result. For example, the branch ["Vampire", "Vampire", "Vampire", "Not Vampire", "Not Vampire"] could refer to the five data points that answered "No" when asked if they ate garlic: the first three all happen to be vampires, but the last two are not.

- A stump (or decision stump) is the list of all branches for a particular classifier. For example, the stump [["Vampire", "Vampire", "Vampire", "Not Vampire", "Not Vampire"], ["Not vampire", "Not vampire", "Not vampire"]] could refer to the results from applying the "Eats garlic" classifier to all of the data points; note that the first element of this stump is the branch for "No" and the second element of this stump is the branch for "Yes".

- A test (occasionally we overload the term classifier here as well) can be thought of as the conceptual manifestation of a decision stump. They basically refer to the same thing.

Now that that's out of the way, it's time to code the branch_disorder function. branch_disorder should take in a branch of a decision stump, represented as a list of classifications (e.g. ["Oak", "Oak", "Maple"]), and return the disorder of the branch, as a number:

def branch_disorder(branch):

For example, calling branch_disorder(["Oak", "Oak", "Maple"]) should yield approximately 0.918.

The following Python tricks and shortcuts may be useful here and/or later in the lab:

- log2: We've defined a function log2 that takes in a single number and returns log2 of the number.

- INF: As in previous labs, we've defined the constant INF, which is a float representing infinity

- list.count: .count is a built-in list method that counts how many times an item occurs in a list. For example, [10, 20, 20, 30].count(20) -> 2.

- float: Recall that if you divide two ints in Python2, it rounds down to the nearest int, so you'll need to cast one of them to a float if you want to perform normal division.

- set: Recall from Lab 0 that a set is handy way to count or enumerate the unique items in a list.

Next, you will use your branch_disorder function to help compute the disorder of an entire test (a decision stump). average_test_disorder should take in a decision stump represented as a list of branches (e.g. [["Oak", "Oak", "Maple"], ["Maple", "Maple"]]), and return the disorder of the entire stump (the weighted sum of branch disorders), as a number:

def average_test_disorder(stump):

For example, calling average_test_disorder([["Oak", "Oak", "Maple"], ["Maple", "Maple"]]) should yield approximately 0.551 since the the first branch has weight 3/5 and a disorder of about 0.915, and the second branch has a weight of 2/5 with a disorder of 0.

Constructing an ID Tree

In order to actually construct an ID tree, we'll need to work directly with classifiers, also known as tests. Recall that at each node of an ID tree, unless the training data at this node is homogeneous, we pick the best available classifier to split the data up. This same logic is repeated at every level of the tree, with each node taking as training data the data points sorted into that category by the node above it.

We will represent each classifier as a Classifier object, described in the API below.

Using the disorder functions you defined above, implement a function to select the best classifier. The function should take in some data (as a list of point dictionaries), a list of classifiers (Classifier objects), and the attribute (a string) you want your ID tree to ultimately be classifying by (e.g. "Vampire?"). It should return the classifier that has the lowest disorder.

Edge cases:

- If multiple classifiers are tied for lowest disorder, break ties by preferring the classifier that occurs earlier in the list.

- If the classifier with lowest disorder is no good (that is, it doesn't separate the data at all), raise the exception NoGoodClassifiersError instead of returning a classifier.

def find_best_classifier(data, possible_classifiers, classification_key):

Next, we will start building the ID tree. Use find_best_classifier to implement the next function, add_best_classifier_to_tree, which should take in an incomplete tree (as an IdentificationTreeNode object) and a list of possible classifiers. The function should perform one of three actions, depending on the node/data:

- If the node is homogeneous ((todo move this to API) you can use the function is_homogeneous(data, classification_key) to check this), then it should be a leaf node, so add the classification to the node.

- If the node is not homogeneous and the data can be divided further, add the best classifier to the node.

- If the node is not homogeneous but there are no good classifiers left, raise a NoGoodClassifiersError.

The function should either modify and return the original IdentificationTreeNode, or raise an exception:

def add_best_classifier_to_tree(id_tree_node, possible_classifiers):

Now, you can implement the function finish_greedy_subtree, which takes in an incomplete tree (represented as an IdentificationTreeNode) and a list of possible classifiers, and adds classifiers to the tree until either perfect classification has been achieved, or there are no good classifiers left. If a leaf node cannot become homogeneous, leave its classification unassigned (which defaults to None). We recommend implementing this function recursively.

def finish_greedy_subtree(id_tree_node, possible_classifiers):

Congrats, you're done constructing ID trees! To put everything together, we have provided a function that calls your functions to construct a complete ID tree:

def construct_greedy_id_tree(data, possible_classifiers, classification_key):

id_tree = IdentificationTreeNode(data, classification_key)

return finish_greedy_subtree(id_tree, possible_classifiers)

We've also provided some datasets from past quizzes, so you can now use your ID tree builder to solve problems! For example, if you run

print construct_greedy_id_tree(tree_data, tree_classifiers, "tree_type")

...it should compute and print the solution to the tree-identification problem from 2014 Q2.

You can also try:

print construct_greedy_id_tree(angel_data, angel_classifiers, "Classification") #from 2012 Q2 print construct_greedy_id_tree(numeric_data, numeric_classifiers, "class") #from 2013 Q2

You can also change the classification_key attribute to, for example, use tree_type to predict what type of bark_texture a tree has:

print construct_greedy_id_tree(tree_data, tree_classifiers_reverse, "bark_texture") #build an ID tree to predict bark_texture

Multiple Choice

Questions 1-4: Identification (of) Trees

These questions refer to the data from 2014 Quiz 2, Problem 1, Part A, which is stored in the variables tree_data (the data) and tree_classifiers (a list of Classifiers).

Question 1: Which of the four classifiers (a.k.a. feature tests) has the lowest disorder? Fill in ANSWER_1 with one of the four classifiers as a string: 'has_leaves', 'leaf_shape', 'orange_foliage', or 'tree_type'.

Question 2: Which of the four classifiers has the second lowest disorder? Fill in ANSWER_2 with one of the four classifiers as a string.

Question 3: If we start constructing the greedy, disorder-minimizing ID tree, we'll start with the classifier from Question 1. Our one-classifier tree has exactly one non-homogeneous branch. For that branch, which of the four classifiers has the lowest disorder? Fill in ANSWER_3 with one of the four classifiers as a string.

Question 4: Is the test with the second lowest disorder in the first round always the same as the test with the lowest disorder in the second round? Answer 'Yes' or 'No' in ANSWER_4.

Questions 5-9: XOR

Each training point in the dataset below has three binary features (A, B, C) and a binary classification (0 or 1). Note that the classification happens to be the boolean function XOR(A,B), while feature C is just random noise. The dataset is available in the lab as binary_data, and the three classifiers are stored in the variable binary_classifiers.

| Classification | A | B | C |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 1 |

| 1 | 0 | 1 | 0 |

| 1 | 0 | 1 | 1 |

| 1 | 1 | 0 | 1 |

| 1 | 1 | 0 | 1 |

| 0 | 1 | 1 | 0 |

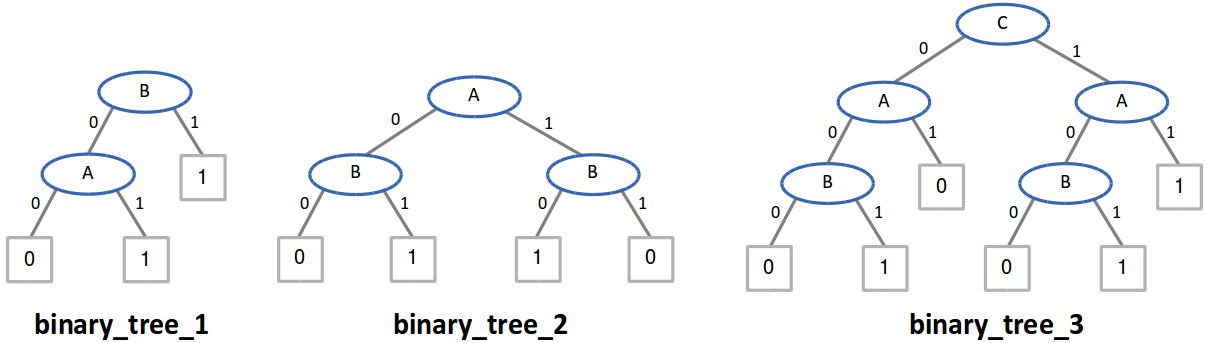

Consider the three identification trees below. They are defined in the lab code as binary_tree_n, for n from 1 to 3. To answer the questions below, you may use your code or work out the answers by hand.

Question 5: Which of the trees correctly classify all of the training data? Fill in ANSWER_5 with a list of numbers (e.g. [1,2] if trees 1 and 2 correctly classify the training data but tree 3 does not).

Question 6: Which of the trees could be created by the greedy, disorder-minimizing ID tree algorithm? Fill in ANSWER_6 with a list of numbers.

Question 7: Which trees correctly compute the function XOR(A,B)? Fill in ANSWER_7 with a list of numbers.

Question 8: Based on Occam's Razor, which tree (among the ones that correctly classify the data) is likely to give the most accurate results when classifying unknown test points? Fill in ANSWER_8 with a single number, as an int.

Question 9: Will the greedy, disorder-minimizing tree always be the simplest possible tree to correctly classify the data? Answer 'Yes' or 'No' in ANSWER_9.

k-Nearest Neighbors

Multiple Choice: Drawing boundaries

todo: something about drawing 1NN boundaries & comparison to ID tree boundaries

Python warm-up: Distance metrics

We can use many different distance metrics with k-nearest neighbors. In 6.034, we cover four such metrics:

- Euclidean distance: the straight-line distance between two points. (todo add formula)

- Manhattan distance: the distance between two points assuming you can only move parallel to axes. In two dimensions, you can conceptualize this as only being able to move along the "grid lines." (todo add formula)

- Hamming distance: the number of differing corresponding components between two vectors. (todo add formula)

- Cosine distance: todo explain (todo add formula) (todo note about cosine_distance being negated, b/c it's a similarity metric where 1 = most similar, 0 = not similar) (todo let's use 1-cos)

It's also possible to transform data instead of using a different metric -- for instance, transforming to polar coordinates -- but in this lab we will only work with distance metrics in Cartesian coordinates.

We'll start with some basic functions for manipulating vectors, which may be useful for cosine_distance. For these, we will represent an n-dimensional vector as a list or tuple of n coordinates. dot_product should compute the dot product of two vectors, while norm computes the length of a vector. (There is a simple implementation of norm that uses dot_product.) Implement both functions:

def dot_product(u, v):

def norm(v):

Next, you'll implement each of the four distance metrics. For the rest of the k-nearest neighbors portion of this lab, we will represent data points as Point objects (described below in the API). Each distance function should take in two Points and return the distance between them as a float or int. Implement each distance function:

def euclidean_distance(point1, point2):

def manhattan_distance(point1, point2):

def hamming_distance(point1, point2):

def cosine_distance(point1, point2):

Classifying Points with k-Nearest Neighbors

We've provided a function: (todo explain)

def get_k_closest_points(point, data, k, distance_metric):

"return list of k points closest to input point, based on metric"

if k >= len(data):

return data

sorted_points = sorted(data, key=lambda p2: (distance_metric(point, p2), p2.coords)) #break ties deterministically by preferring points with smaller coordinates

return sorted_points[:k]

Your task is to use that to write a function that classifies a point, given a set of data (as a list of Points), a value of k, and a distance metric (a function):

def knn_classify_point(point, data, k, distance_metric):

todo hint: to get the mode of a list, do: max(set(my_list), key=my_list.count)

Cross-validation: Choosing the best k and distance metric

todo explain functions

todo clarify different definitions of cross-validation; we're using leave-one-out

def cross_validate(data, k, distance_metric):

def find_best_k_and_metric(data):

More multiple choice

todo something about overfitting, underfitting, running classify and find_best_k... on some data to see what happens

Survey

Please answer these questions at the bottom of your lab5.py file:

- NAME: What is your name? (string)

- COLLABORATORS: Other than 6.034 staff, with whom did you work on this lab? (string, or empty string if you worked alone)

- HOW_MANY_HOURS_THIS_LAB_TOOK: Approximately how many hours did you spend on this lab? (number or string)

- WHAT_I_FOUND_INTERESTING: Which parts of this lab, if any, did you find interesting? (string)

- WHAT_I_FOUND_BORING: Which parts of this lab, if any, did you find boring or tedious? (string)

- (optional) SUGGESTIONS: What specific changes would you recommend, if any, to improve this lab for future years? (string)

(We'd ask which parts you find confusing, but if you're confused you should really ask a TA.)

When you're done, run the online tester to submit your code.

API Reference Documentation

The file api.py defines the Classifier, IdentificationTreeNode, and Point classes, as well as some helper functions for ID trees, all described below. (todo: describe helper functions -- jake: I added the section and half-wrote it below)

Classifier

Classifier objects are used for constructing and manipulating ID trees.

A Classifier has the following attributes:

- classifier.name, the name of the classifier.

- classifier.classify, a function that takes in a point and returns a value.

In our ID trees, a point is represented as a dict mapping attribute names to their values.

IdentificationTreeNode

In this lab, an ID tree is represented recursively as a tree of IdentificationTreeNode objects. In particular, an IdentificationTreeNode object fully represents an entire ID tree rooted at that node.

For example, suppose we have an IdentificationTreeNode called id_tree_node Then, id_tree_node's children are themselves IdentificationTreeNode objects, each fully describing the sub-trees of id_tree_node. However, if id_tree_node has no children, then id_tree_node is a leaf, meaning that it represents a homogeneous (by classification) set of data points. Furthermore, any datum at this node is definitively classified by that leaf's classification.

As such, in a completed ID tree, each node is either

- a leaf node with a classification such as "Yes" (is a vampire) or "No" (is not a vampire) in the vampires example; or

- a non-leaf node with a classifier (such as "Accent" in the vampires example), with branches (one per classifier result, e.g. "Heavy", "Odd", and "None") leading to child nodes.

An IdentificationTreeNode has the following attributes and methods:

- id_tree_node.data, the list of training points at this node in the tree.

- id_tree_node.classification_key, the single attribute by which the tree classifies points (e.g. "Vampire?" for the vampires example, or "Classification" for the angel data).

- id_tree_node.get_parent_branch_name(): returns the name of the branch leading to this node, or None if this is a root node.

- id_tree_node.is_leaf(): returns True if the node is a leaf (has a classification), otherwise False.

- id_tree_node.set_classifier(classifier): Uses the specified Classifier object to add branches below the current node. Modifies and returns the original id_tree_node. May print warnings if the specified classifier is inadvisable.

- id_tree_node.apply_classifier(point): Applies this node's classifier to a given data point by following the appropriate branch of the tree, then returns the child node. If this node is a leaf node (and thus doesn't have a classifier), raises a ClassifierError.

- id_tree_node.get_classifier(): returns the classifier associated with this node, if it has one. Otherwise, returns None.

- id_tree_node.set_node_classification(classification): Sets this node's classification, thus defining this node as a leaf node. Modifies and returns the original id_tree_node. May print warnings if the node already has branches defined.

- id_tree_node.get_node_classification(): returns this node's classification, if it has one. Otherwise, returns None.

- id_tree_node.get_branches(): returns a dictionary mapping this node's child branches to the nodes at the end of them.

- id_tree_node.describe_type(): returns a human-readable string describing the node type (e.g. leaf, subtree, etc.).

Helper Functions for ID Trees

- split_on_classifier(classifier, data): given a particular classifier and a list of data, returns a dictionary mapping each possible feature to a list of the data points in that branch .

- extract_classifications(branches_or_data, classification_key): given either a list of data points, or a dictionary mapping features to lists of data point, converts the input into the format of a decision stump ..... (todo wait what? Do we even need to allow this to take in just 'a list of data points'? Surely the students can do that themselves if they need to)

- is_homogeneous(data, classification_key): given a list of data points and a classification key, returns True if that data is homogeneous with respect to classifier keyed on classifcation_key.

Point (for kNN)

Point objects are used in the k-nearest neighbors section only.

A Point has the following attributes:

- point.name, the name of the point (a string), if defined.

- point.coords, the coordinates of the point, represented as a vector (a tuple or list of numbers).

- point.classification, the classification of the point, if known.

Appendix

How to handle exceptions

(using NoGoodClassifiersError as an example)

| Python command | Effect |

|---|---|

| raise NoGoodClassifiersError("message") | Stops execution by raising, or throwing, a NoGoodClassifiersError with the specified error message |

| try: #code here | Defines the beginning of a block of code that might raise an exception |

| except NoGoodClassifiersError: #code here | Follows a try block. Defines what to do if a NoGoodClassifiersError was raised in the try block. |