Lab 8: Support Vector Machines

From 6.034 Wiki

(→SupportVectorMachine) |

(→Train a support vector machine) |

||

| Line 88: | Line 88: | ||

| - | === | + | === Training a support vector machine === |

| - | + | So far, we have seen how to calculate the final parameters of an SVM (given the decision boundary), and we've used the equations to assess how well an SVM has been trained, but we haven't actually attempted to train an SVM. | |

| - | + | In practice, training an SVM is a hill-climbing problem in alpha-space using the Lagrangian. There's a bit of math involved, and the equations are typically solved using Sequential Minimal Optimization (SMO), a type of quadratic programming (which is similar to linear programming, but more complicated). | |

| + | ...but if that sounds scary, don't worry -- we've provided some code to do most of it for you! | ||

| + | |||

| + | ==== What <tt>train_svm</tt> does ==== | ||

| + | In train_svm.py, we've provided some SVM-solving code, originally written by past 6.034 student Crystal Pan. Here is approximately what the function <tt>train_svm</tt> (in train_svm.py) does: | ||

| + | # [todo] | ||

| + | |||

| + | <!--==== What <tt>display_svm</tt> does ====--> | ||

| + | We've also provided visualization code in display_svm.py, originally written by past 6.034 student Kelly Shen. This code is automatically called by <tt>train_svm</tt>, although you can adjust the parameters or disable it by calling <tt>train_svm</tt> with [[#Parameters for train|additional parameters]]. | ||

| + | |||

| + | |||

| + | Note that the visualization requires the Python module Matplotlib. If you don't have Matplotlib installed, you can: | ||

| + | * install Matplotlib (just Google it) | ||

| + | * install a stand-alone Python distribution that comes with Matplotlib and won't interfere with your current Python installation (e.g. Anaconda or Python(x,y)) | ||

| + | * work with a friend, running the code on their computer | ||

| + | * use an Athena cluster computer (the Athena version of Python 2 should include Matplotlib) | ||

| + | * use Athena locally via ssh -X (which enables Athena to display GUI windows, including colored plots, on your screen): | ||

| + | ssh -X username@athena.dialup.mit.edu | ||

| + | * comment out the line "<tt>from display_svm import create_svm_graph</tt>" in train_svm.py and run <tt>train_svm</tt> with <tt>show_graph=False</tt> (but then you won't get to watch the SVM being trained!) | ||

| + | |||

| + | ==== Your coding task: Update SVM from alpha values ==== | ||

| + | Your task is to take the alpha values determined by SMO and use them to determine the normal vector w, the offset b, and the support vectors. Implement the function <tt>update_svm_from_alphas</tt>, which takes in a SupportVectorMachine and a kernel function (such as <tt>dot_product</tt>), then uses the SVM's training points and alpha values to update its <tt>w</tt>, <tt>b</tt>, and <tt>support_vectors</tt>. Return the updated SVM. (If the input SVM already has <tt>w</tt>, <tt>b</tt>, and/or <tt>support_vectors</tt> defined, ignore them and overwrite them. For this function, you may assume that the SVM is 2-dimensional.) | ||

| + | |||

| + | def update_svm_from_alphas(svm, kernel_fn=dot_product): | ||

| + | |||

| + | |||

| + | Once you've implemented that, you should be able to train an SVM on a dataset and visualize the results! | ||

| + | |||

| + | ==== Parameters for <tt>train_svm</tt> ==== | ||

| + | The function <tt>train_svm</tt> has only one '''required''' arguments: | ||

| + | * <tt><b>training_points</b></tt>: A list of training points as <tt>Point</tt> objects. | ||

| + | |||

| + | It also has many optional arguments (with defaults given): | ||

| + | * [todo] | ||

| + | |||

| + | ==== One more task: Multiple-choice questions about SVM training (based on <tt>train_svm</tt>) ==== | ||

| + | [todo] | ||

| + | |||

| + | ==== If you want to do more... ==== | ||

If you want to learn more about training SVMs, the following resources (mostly beyond the scope of 6.034) may be helpful: | If you want to learn more about training SVMs, the following resources (mostly beyond the scope of 6.034) may be helpful: | ||

* [http://cs.nyu.edu/~dsontag/courses/ml12/slides/lecture5.pdf SVM lecture notes] from a machine-learning class at NYU | * [http://cs.nyu.edu/~dsontag/courses/ml12/slides/lecture5.pdf SVM lecture notes] from a machine-learning class at NYU | ||

| Line 99: | Line 137: | ||

* [http://courses.csail.mit.edu/6.034f/ai3/SVM.pdf SVM slides], from the [[Reference material and playlist]] | * [http://courses.csail.mit.edu/6.034f/ai3/SVM.pdf SVM slides], from the [[Reference material and playlist]] | ||

* Wikipedia articles on [https://en.wikipedia.org/wiki/Support_vector_machine#Linear_SVM SMV math] and [https://en.wikipedia.org/wiki/Sequential_minimal_optimization Sequential Minimal Optimization] | * Wikipedia articles on [https://en.wikipedia.org/wiki/Support_vector_machine#Linear_SVM SMV math] and [https://en.wikipedia.org/wiki/Sequential_minimal_optimization Sequential Minimal Optimization] | ||

| + | |||

| + | Other possible extensions of this lab include: | ||

| + | * Define some non-linear kernel functions and try training with them. | ||

| + | * Extend the visualization code to display non-linear kernels. | ||

| + | * [todo] | ||

| + | |||

| + | If you do something cool, we'd love to see it! Feel free to send your code and/or results to 6.034-2016-staff@mit.edu (ideally with some sort of documentation). Your code could even end up in a future version of this lab! (With your permission, of course.) | ||

== Survey == | == Survey == | ||

Revision as of 20:17, 28 October 2016

Contents |

This lab is due by Friday, November 4 at 10:00pm.

To work on this lab, you will need to get the code, much like you did for the previous labs. You can:

- Use Git on your computer: git clone username@athena.dialup.mit.edu:/mit/6.034/www/labs/lab7

- Use Git on Athena: git clone /mit/6.034/www/labs/lab7

- Download it as a ZIP file: http://web.mit.edu/6.034/www/labs/lab7/lab7.zip

- View the files individually: http://web.mit.edu/6.034/www/labs/lab7/

Your answers for this lab belong in the main file lab7.py.

Problems: Support Vector Machines

This lab is divided into two independent parts. In the first part, you'll code subroutines necessary for training and using neural networks. In the second part, you'll code subroutines for using and validating support vector machines.

SVM Equations and Properties

Vector Math

We'll start with some basic functions for manipulating vectors. For now, we will represent an n-dimensional vector as a list or tuple of n coordinates. dot_product should compute the dot product of two vectors, while norm computes the length of a vector. (There is a simple implementation of norm that uses dot_product.) Implement both functions.

(If these look familiar, it's probably because you saw them on Lab 5. Feel free to copy your implementations from your lab5.py.)

def dot_product(u, v):

def norm(v):

Later, when you need to actually manipulate vectors, note that we have also provided methods for adding two vectors (vector_add) and for multiplying a scalar by a vector (scalar_mult). (See the API, below.)

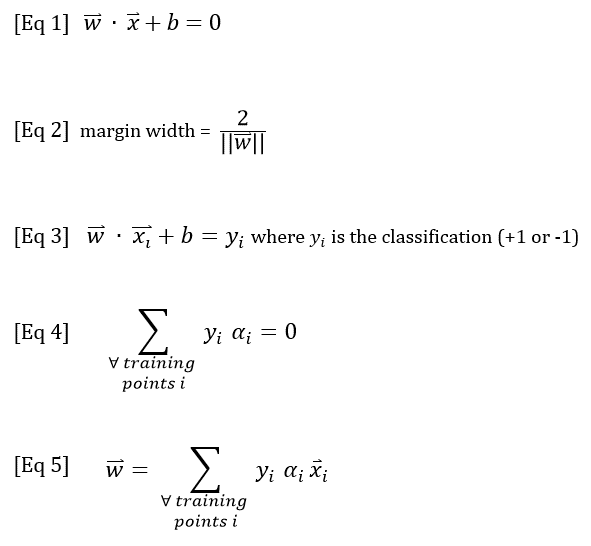

SVM Equations (reference only)

Next, we will use the following five SVM equations. Recall from recitation that Equations 1-3 define the decision boundary and the margin width, while Equations 4 & 5 can be used to calculate the alpha (supportiveness) values for the training points.

For more information about how to apply these equations, see:

- Dylan's guide to solving SVM quiz problems

- Robert McIntyre's SVM notes

- SVM notes, from the Reference material and playlist

Using the SVM Boundary Equations

We will start by using Equation 1 to classify a point with a given SVM, ignoring the point's actual classification (if any). According to Equation 1, a point's classification is +1 when w·x + b > 0, or -1 when w·x + b < 0. If w·x + b = 0, the point lies on the decision boundary, so its classification is ambiguous.

First, evaluate the expression w·x + b to calculate how positive a point is. svm is a SupportVectorMachine object, and point is a Point (see the API below for details).

def positiveness(svm, point):

Next, classify a point as +1 or -1. If the point lies on the boundary, return 0.

def classify(svm, point):

Then, use the SVM's current decision boundary to calculate its margin width (Equation 2):

def margin_width(svm):

Finally, we will check that the gutter constraint is satisfied. The gutter constraint requires that positiveness be +1 for positive support vectors, and -1 for negative support vectors. Our function will also check that no training points lie between the gutters -- that is, every training point should have a positiveness value indicating that it either lies on a gutter or outside the margin. (Note that the gutter constraint does not check whether points are classified correctly.) Implement check_gutter_constraint, which should return a set (not a list) of the training points that violate one or both conditions:

def check_gutter_constraint(svm):

Using the Supportiveness Equations

To train a support vector machine, every training point is assigned a supportiveness value (also known as an alpha value, or a Lagrange multiplier), representing how important the point is in determining (or "supporting") the decision boundary. The supportiveness values must satisfy a number of conditions, which we will check below.

First, implement check_alpha_signs to check each point's supportiveness value. Each training point should have a non-negative supportiveness. Specifically, all support vectors should have positive supportiveness, while all non-support vectors should have a supportiveness of 0. This function, like check_gutter_constraint above, should return a set of the training points that violate either of the supportiveness conditions.

def check_alpha_signs(svm):

Implement check_alpha_equations to check that the SVM's supportiveness values are consistent with its boundary equation and the classifications of its training points. Return True if both Equations 4 and 5 are satisfied, otherwise False.

def check_alpha_equations(svm):

Classification Accuracy

Once a support vector machine has been trained -- or even while it is being trained -- we want to know how well it has classified the training data. Write a function that checks whether the training points were classified correctly and returns a set containing the training points that were misclassified, if any.

def misclassified_training_points(svm):

Training a support vector machine

So far, we have seen how to calculate the final parameters of an SVM (given the decision boundary), and we've used the equations to assess how well an SVM has been trained, but we haven't actually attempted to train an SVM.

In practice, training an SVM is a hill-climbing problem in alpha-space using the Lagrangian. There's a bit of math involved, and the equations are typically solved using Sequential Minimal Optimization (SMO), a type of quadratic programming (which is similar to linear programming, but more complicated).

...but if that sounds scary, don't worry -- we've provided some code to do most of it for you!

What train_svm does

In train_svm.py, we've provided some SVM-solving code, originally written by past 6.034 student Crystal Pan. Here is approximately what the function train_svm (in train_svm.py) does:

- [todo]

We've also provided visualization code in display_svm.py, originally written by past 6.034 student Kelly Shen. This code is automatically called by train_svm, although you can adjust the parameters or disable it by calling train_svm with additional parameters.

Note that the visualization requires the Python module Matplotlib. If you don't have Matplotlib installed, you can:

- install Matplotlib (just Google it)

- install a stand-alone Python distribution that comes with Matplotlib and won't interfere with your current Python installation (e.g. Anaconda or Python(x,y))

- work with a friend, running the code on their computer

- use an Athena cluster computer (the Athena version of Python 2 should include Matplotlib)

- use Athena locally via ssh -X (which enables Athena to display GUI windows, including colored plots, on your screen):

ssh -X username@athena.dialup.mit.edu

- comment out the line "from display_svm import create_svm_graph" in train_svm.py and run train_svm with show_graph=False (but then you won't get to watch the SVM being trained!)

Your coding task: Update SVM from alpha values

Your task is to take the alpha values determined by SMO and use them to determine the normal vector w, the offset b, and the support vectors. Implement the function update_svm_from_alphas, which takes in a SupportVectorMachine and a kernel function (such as dot_product), then uses the SVM's training points and alpha values to update its w, b, and support_vectors. Return the updated SVM. (If the input SVM already has w, b, and/or support_vectors defined, ignore them and overwrite them. For this function, you may assume that the SVM is 2-dimensional.)

def update_svm_from_alphas(svm, kernel_fn=dot_product):

Once you've implemented that, you should be able to train an SVM on a dataset and visualize the results!

Parameters for train_svm

The function train_svm has only one required arguments:

- training_points: A list of training points as Point objects.

It also has many optional arguments (with defaults given):

- [todo]

One more task: Multiple-choice questions about SVM training (based on train_svm)

[todo]

If you want to do more...

If you want to learn more about training SVMs, the following resources (mostly beyond the scope of 6.034) may be helpful:

- SVM lecture notes from a machine-learning class at NYU

- paper on Sequential Minimal Optimization

- SVM notes, from the Reference material and playlist

- SVM slides, from the Reference material and playlist

- Wikipedia articles on SMV math and Sequential Minimal Optimization

Other possible extensions of this lab include:

- Define some non-linear kernel functions and try training with them.

- Extend the visualization code to display non-linear kernels.

- [todo]

If you do something cool, we'd love to see it! Feel free to send your code and/or results to 6.034-2016-staff@mit.edu (ideally with some sort of documentation). Your code could even end up in a future version of this lab! (With your permission, of course.)

Survey

Please answer these questions at the bottom of your lab7.py file:

- NAME: What is your name? (string)

- COLLABORATORS: Other than 6.034 staff, whom did you work with on this lab? (string, or empty string if you worked alone)

- HOW_MANY_HOURS_THIS_LAB_TOOK: Approximately how many hours did you spend on this lab? (number or string)

- WHAT_I_FOUND_INTERESTING: Which parts of this lab, if any, did you find interesting? (string)

- WHAT_I_FOUND_BORING: Which parts of this lab, if any, did you find boring or tedious? (string)

- (optional) SUGGESTIONS: What specific changes would you recommend, if any, to improve this lab for future years? (string)

(We'd ask which parts you find confusing, but if you're confused you should really ask a TA.)

When you're done, run the online tester to submit your code.

API

Support Vector Machines

The file svm_api.py defines the Point and SupportVectorMachine classes, as well as some helper functions for vector math, all described below.

Point

A Point has the following attributes:

- point.name, the name of the point (a string).

- point.coords, the coordinates of the point, represented as a vector (a tuple or list of numbers).

- point.classification, the classification of the point, if known. Note that classification (if any) is a number, typically +1 or -1.

- point.alpha, the supportiveness (alpha) value of the point, if assigned.

The Point class supports iteration (to iterate through the coordinates).

SupportVectorMachine

A SupportVectorMachine is a classifier that uses a decision boundary to classify points. The decision boundary is defined by a normal vector w (a vector, represented as a list or tuple of coordinates), and an offset b (a number). The SVM also has a list of training points and optionally a list of support vectors. You can access its parameters using these attributes:

- svm.w, the normal vector w.

- svm.b, the offset b.

- svm.training_points, a list of Point objects with known classifications.

- svm.support_vectors, a list of Point objects that serve as support vectors for the SVM. Every support vector is also a training point.

To set an SVM's decision boundary, you can modify the parameters w and b directly, or use

- svm.set_boundary(new_w, new_b) to update w and b.

To instantiate a new SVM, use the constructor:

- my_svm = SupportVectorMachine(w, b, training_points, support_vectors)

The SupportVectorMachine class also has a .copy() method for making a deep copy of an SVM.

Helper functions for vector math

vector_add: Given two vectors represented as iterable vectors (lists or tuples of coordinates) or Points, returns their vector sum as a list of coordinates.

scalar_mult: Given a constant scalar and an iterable vector (as a tuple or list of coordinates) or a Point, returns a scaled list of coordinates.